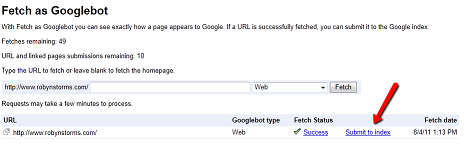

Google has announced another way for site owners to request that a specific web page be crawled. The Fetch as Googlebot feature in Webmaster Tools has been around for a while, but it now lets site owners submit a new or updated URL for indexing. The process is simple and doesn’t require users to start the crawling process over from the beginning. If a fetch succeeds, you will now see an option to submit that URL to Google’s index.

A submitted URL will typically be indexed within a day, but that doesn’t guarantee every submitted URL will be indexed. Once a page has been crawled, Google evaluates whether the URL belongs in its index. As with any other discovery method (e.g., XML sitemaps, internal and external links, RSS feeds), Google runs a separate process to decide whether to keep the page in its index.

Alongside this update, Google has also revised the public “Add your URL to Google” form. It is now called the “Crawl URL form” and no longer requires owner verification, but it still limits you to 50 URL submissions per week.